When you're building a change data capture (CDC) pipeline with Postgres, one of the first decisions you'll make is which output plugin to use. These plugins determine how your database changes get formatted and delivered—whether you're replicating to another Postgres instance, streaming to Kafka, or delivering changes to a webhook.

But what exactly are these plugins, and how do they differ in practice? Let's dive in with a concrete example and explore your options.

A simple example: see an update flow end-to-end

To understand how different output plugins work, let's start with a simple scenario. We'll set up logical replication, make a change, and see how different plugins format that change.

Setting up logical replication

First, you need to enable logical replication in Postgres. You can read the Sequin docs to get specific instructions for Postgres providers like AWS, GCP, and Azure. But at a high level, you can run the command show wal_level; . If it’s not, you can follow these steps to enable it.

Create a table and replication slot

Create a table (if you don’t have one already) and create a publication and replication slot:

-- 1. Create a test table

CREATE TABLE users (

id SERIAL PRIMARY KEY,

name VARCHAR(255) NOT NULL,

email VARCHAR(255) NOT NULL,

created_at TIMESTAMP DEFAULT NOW()

);

-- 2. Set the replica identity to full for your table so all changes flow through:

alter table public.properties replica identity full;

-- 2. Insert initial data

INSERT INTO users (name, email) VALUES ('John Doe', 'john@example.com');

-- 3. Crate a publication

create publication cool_pub_name for all tables with (publish_via_partition_root = true);

-- 4. Create a logical replication slot with your chosen plugin

-- Start with test_decoding to get familiar:

SELECT pg_create_logical_replication_slot('test_slot', 'test_decoding');

Now make the change we want to track:

-- The change we're tracking

UPDATE users SET name = 'John Smith' WHERE id = 1;

Reading changes from the replication slot

To see the formatted output, you can read from your replication slot:

-- For test_decoding (simplest to read):

SELECT lsn, xid, data FROM pg_logical_slot_peek_changes('test_slot', NULL, NULL);

This simple update will look very diff erent depending on which output plugin you're using.

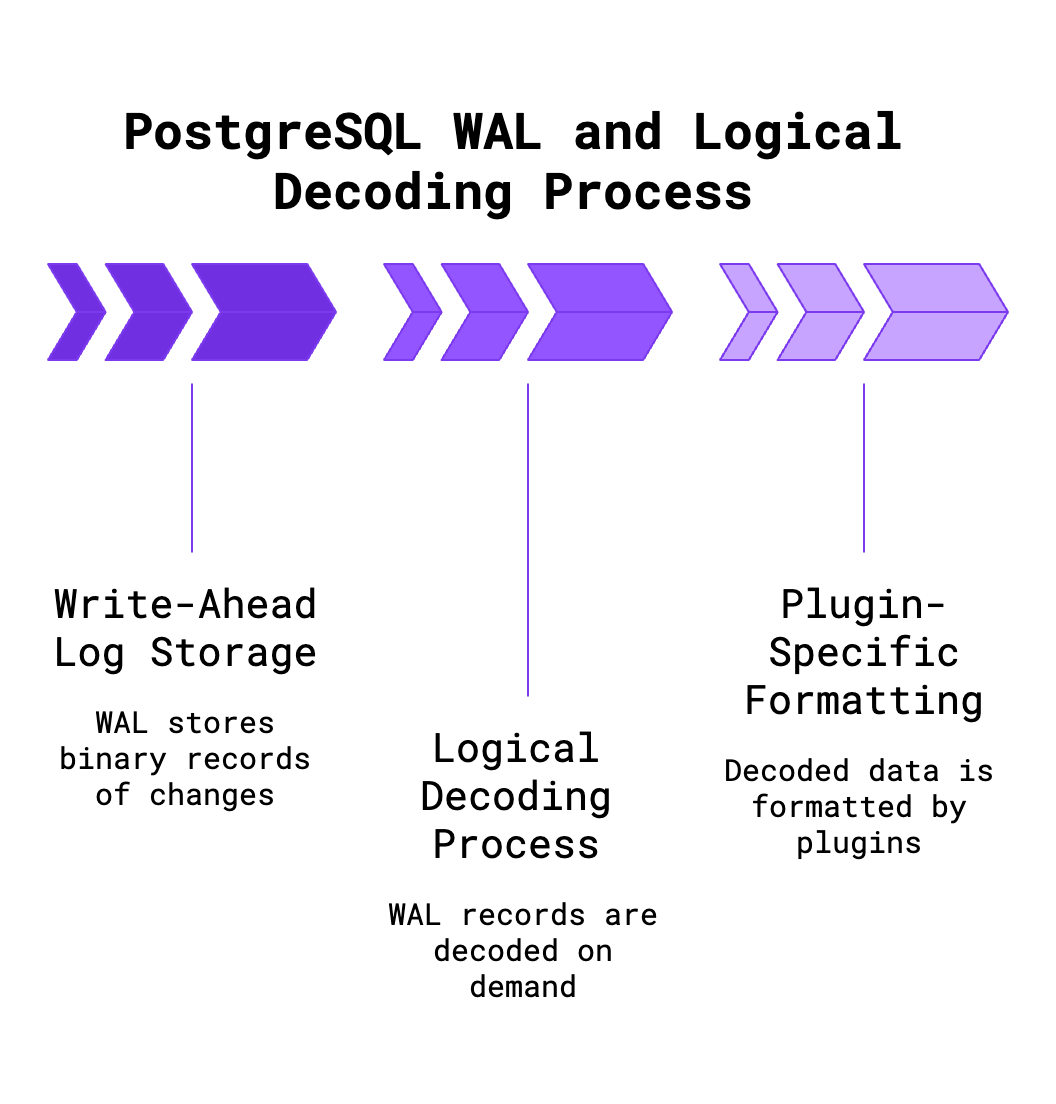

How changes Fflow from table to output plugin

When you executed that UPDATE statement, here's the journey your change took through Postgres to arrive in the replication slot:

1. Write-Ahead Log (WAL)

Postgres's WAL does not store pre-decoded, human-readable messages. Instead, it logs low-level binary records describing each change at the storage level. When our user name update happens, the WAL record looks something like this:

# What's actually in the WAL (simplified)

WAL Record: LSN 0/1A2B3C4

- Relation OID: 16384 (internal table identifier)

- Transaction ID: 12345

- Operation: UPDATE

- Block/offset: physical storage location

- Old tuple: [binary data for old row]

- New tuple: [binary data for new row]

At this stage the WAL only knows about internal identifiers and bytes – it does not contain table names, column names, or data in text form. Any readable output you see (e.g. JSON or text changes) is generated later by the decoding plugin – not stored in the WAL itself.

2. Logical decoding process

A logical replication slot streams changes by decoding WAL records on demand as a client consumes the slot. Here's what happens when you read from your slot:

- Postgres starts from your slot's position (LSN) and reads WAL records sequentially

- Looks up table metadata: The decoding process uses the WAL record's metadata (like the relation OID) to look up the corresponding table and column definitions in the system catalogs

- Transforms internal data: The binary tuple data gets converted into logical representation with actual table names, column names, and typed values

- Assembles complete transactions: Internally, the logical decoder assembles transactions in commit order; only after a transaction is fully decoded (and committed) will its changes be passed to the plugin

Importantly, this decoding happens at read time, not when the WAL is originally written.

3. Plugin-specific formatting

The output plugin takes the decoded change data and formats it according to its own rules. For our user update, every plugin receives the same structured information:

- Table name:

public.users - Operation:

UPDATE - New values:

{id: 1, name: "John Smith", email: "john@example.com"} - Old values:

{name: "John Doe"}(what changed)

Every logical decoding plugin receives the same core information about the change; what differs is how they output it. The test_decoding plugin formats this as human-readable text, wal2json converts it to JSON, and pgoutput encodes it in PostgreSQL's binary logical replication protocol.

This architecture allows PostgreSQL to support many output formats without changing the underlying WAL format or storing duplicate information. The core database only needs to log changes once in the WAL, and then any number of output plugins can decode those logs and present the data in JSON, SQL, binary, etc., as needed.

Built-in output plugins

PostgreSQL ships with two logical decoding plugins out of the box. These don't require any additional installations—they're ready to use on any Postgres 10+ server.

pgoutput

pgoutput is PostgreSQL's default plugin for logical replication. If you're using the built-in publish/subscribe system, you're already using this plugin behind the scenes.

What our user name update looks like:

# Binary protocol message (conceptual representation)

BEGIN LSN: 0/1A2B3C4

TABLE: public.users

UPDATE: id[integer]=1 name[text]='John Smith' (old: 'John Doe') email[text]='john@example.com'

COMMIT LSN: 0/1A2B3C4

The actual output is a binary protocol, so you can't just read it directly. Tools like Sequin or custom replication clients parse this format.

Trade-offs:

- ✅ Binary format is efficient and compact

- ✅ Handles complex PostgreSQL data types without losing information

- ✅ High performance with incremental streaming

- ✅ Production-ready and universally supported on managed services

- ✅ Used by PostgreSQL's native logical replication

- ❌ Requires special tools to read (can't just use SQL functions)

- ❌ Binary protocol is not human-readable for debugging

test_decoding

test_decoding is PostgreSQL's example plugin. It's mainly useful for understanding how logical decoding works or for quick debugging.

What our user name update looks like:

BEGIN 12345

table public.users: UPDATE: id[integer]:1 name[text]:'John Smith' email[text]:'john@example.com'

COMMIT 12345

Trade-offs:

- ✅ Text format is human-readable and easy to understand

- ✅ Great for learning how logical decoding works

- ✅ Included with PostgreSQL by default

- ✅ Useful for quick debugging of replication issues

- ❌ Output format is not designed for production parsing

- ❌ No advanced features like filtering or sophisticated type handling

- ❌ Limited functionality compared to other plugins

Popular third-party plugins

The PostgreSQL community has created several plugins for specific integration needs. Here's a high-level overview of the most common ones:

wal2json

wal2json outputs changes in JSON format, making it easy to work with in any programming language.

What our user name update looks like:

{

"change": [

{

"kind": "update",

"schema": "public",

"table": "users",

"columnnames": ["id", "name", "email", "created_at"],

"columnvalues": [1, "John Smith", "john@example.com", "2024-01-15T10:30:00"],

"oldkeys": {

"keynames": ["id"],

"keyvalues": [1]

},

"oldvalues": [1, "John Doe", "john@example.com", "2024-01-15T10:30:00"]

}

]

}

Trade-offs:

- ✅ Easy to parse in any language

- ✅ Human-readable format

- ✅ Supported on most managed services (RDS, Cloud SQL)

- ❌ Higher overhead than binary formats

- ❌ Can struggle with very large transactions

decoderbufs

decoderbufs uses Protocol Buffers for efficient binary serialization.

What our user name update looks like:

# Binary protobuf message (conceptual)

RowMessage {

transaction_id: 12345

table: "public.users"

op: UPDATE

new_tuple: {

columns: [

{name: "id", type: INTEGER, value: 1},

{name: "name", type: TEXT, value: "John Smith"},

{name: "email", type: TEXT, value: "john@example.com"}

]

}

old_tuple: {

columns: [

{name: "name", type: TEXT, value: "John Doe"}

]

}

}

Trade-offs:

- ✅ Very efficient binary format

- ✅ Schema-defined structure

- ✅ Great for high-throughput scenarios

- ❌ Requires building and installing

- ❌ Not available on most managed services

- ❌ More complex to work with

Specialized plugins

wal2mongo: Specifically designed for replicating to MongoDB, outputs MongoDB-compatible JSON operations.

decoder_raw: Outputs changes as raw SQL statements that you could execute on another database.

Choosing the right plugin

Your choice often comes down to a few practical factors:

Environment constraints

Managed services (AWS RDS, Google Cloud SQL, Azure): You're typically limited to pgoutput, test_decoding, and wal2json. Choose pgoutput for Postgres-to-Postgres replication or wal2json for external integrations.

Self-hosted: You have full flexibility. Consider decoderbufs for high-performance scenarios or stick with pgoutput for simplicity.

Performance requirements

For high-volume scenarios:

- pgoutput - Best overall choice for most use cases

- decoderbufs - If you need non-Postgres output and can manage the complexity

- wal2json - Convenient but can be a bottleneck at scale

Output plugin vs. final format

One common confusion: the output plugin format isn't necessarily the format your application sees.

For example, with Sequin:

- Debezium can use

pgoutputto receive binary data from Postgres - Then convert it to JSON for delivery to sinks

- Your application sees the JSON format, not the

pgoutputformat